How easy it is to manipulate us. Analyzing comments on humorous TikTok deepfakes

So far, fraudsters, propagandists, and comedians have benefited most from deepfake technology. The first two categories need no explanation. The third reminds us once again that even a low-quality, obviously fake deepfake still finds people who take it seriously. This means that fraudsters and propagandists are doing quite well.

We downloaded more than 10,000 comments on four popular TikTok videos from a channel that uses deepfake technology to make crude comic videos about politicians "on the front lines." Our goal was to estimate what share of commenters took these videos at face value. The results are not very encouraging.

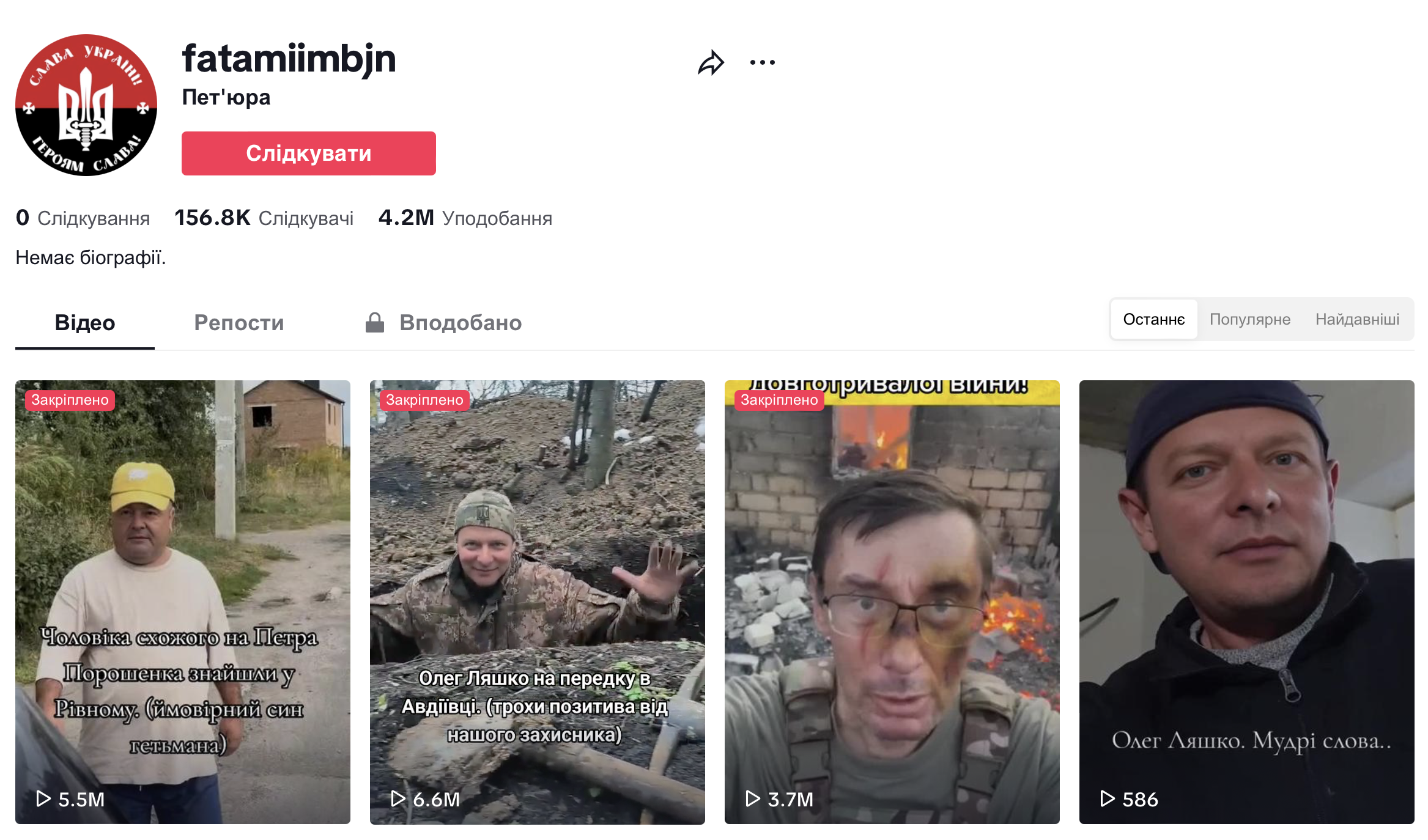

Since the beginning of 2023, the ironic TikTok channel @fatamiimbjn ("Petyura") has regularly posted humorous deepfake videos starring politicians. Crudely made, often with obvious signs of an artificial mask "worn" by actual military personnel, these videos have earned the author more than 150,000 followers and 4.2 million likes in a little over a year and a half. All of this is thanks to AI tools and jokes built on the faces of Petro Poroshenko, Yuriy Lutsenko, Oleh Liashko, Arseniy Yatsenyuk, and other figures of Ukrainian political life from the pre-digital era.

TikTok's recommendation algorithm carries the channel's content beyond its own subscribers. Some videos go viral and collect millions of views and thousands of comments, including from people who have never seen the channel's other content and therefore risk taking the humorous fakes seriously. And they do.

To understand the scale of the problem and whether we really are that easy to manipulate, we downloaded all the comments on the channel's four most popular videos, which feature a fake Oleh Liashko and a fake Yuriy Lutsenko somewhere "at the front." We then manually classified all of the more than 10,000 comments to separate commenters who got the joke from those who seemed to take the video seriously.

Preview of the videos under study:

"Don't blink so much, bro"

Note that almost all of the videos on this channel bear the telltale signs of a fake. Even without knowing what kind of channel it is or the context, an attentive viewer has clues for distrusting either the video itself or its caption.

Here are some of them:

- when the head turns, the eyes stay fixed on the camera and barely move;

- the mask handles blinking very poorly (so characters often don't blink at all or blink extremely unnaturally);

- the face may shift or sit crookedly on the head, especially during movement;

- the main character is usually silent, and if he or she does speak, the mismatch between the audio and the video is striking;

- the mask may be built from old photographs, which is why attentive commenters sometimes noted that Liashko had "gotten younger";

- and the body itself often signals that it clearly does not belong to the person the channel creator is trying to pass it off as: the man in the video may be too tall, too short, of a completely different build, etc.

"Everyone laughed at him, but he... The war showed who was who"

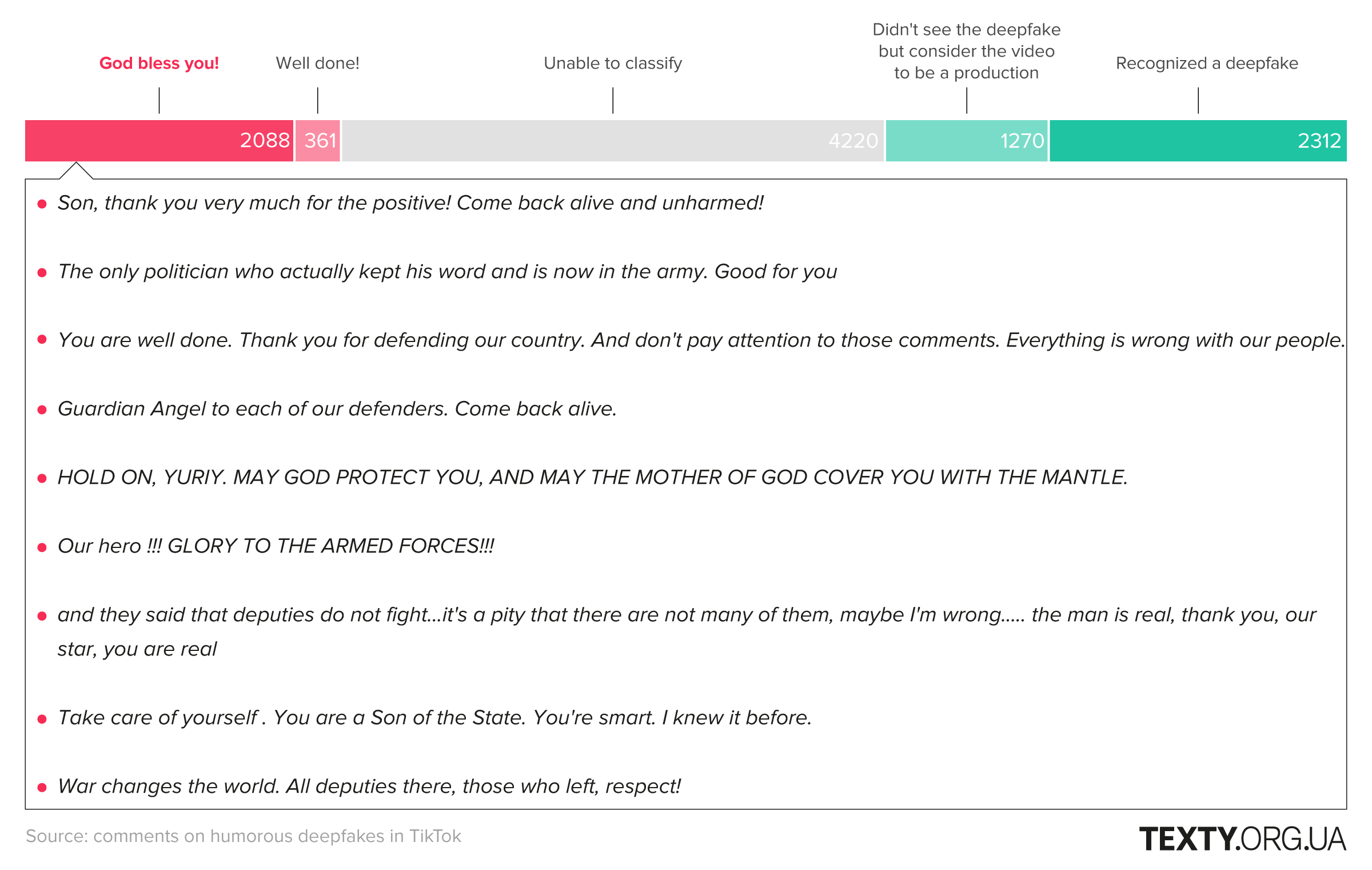

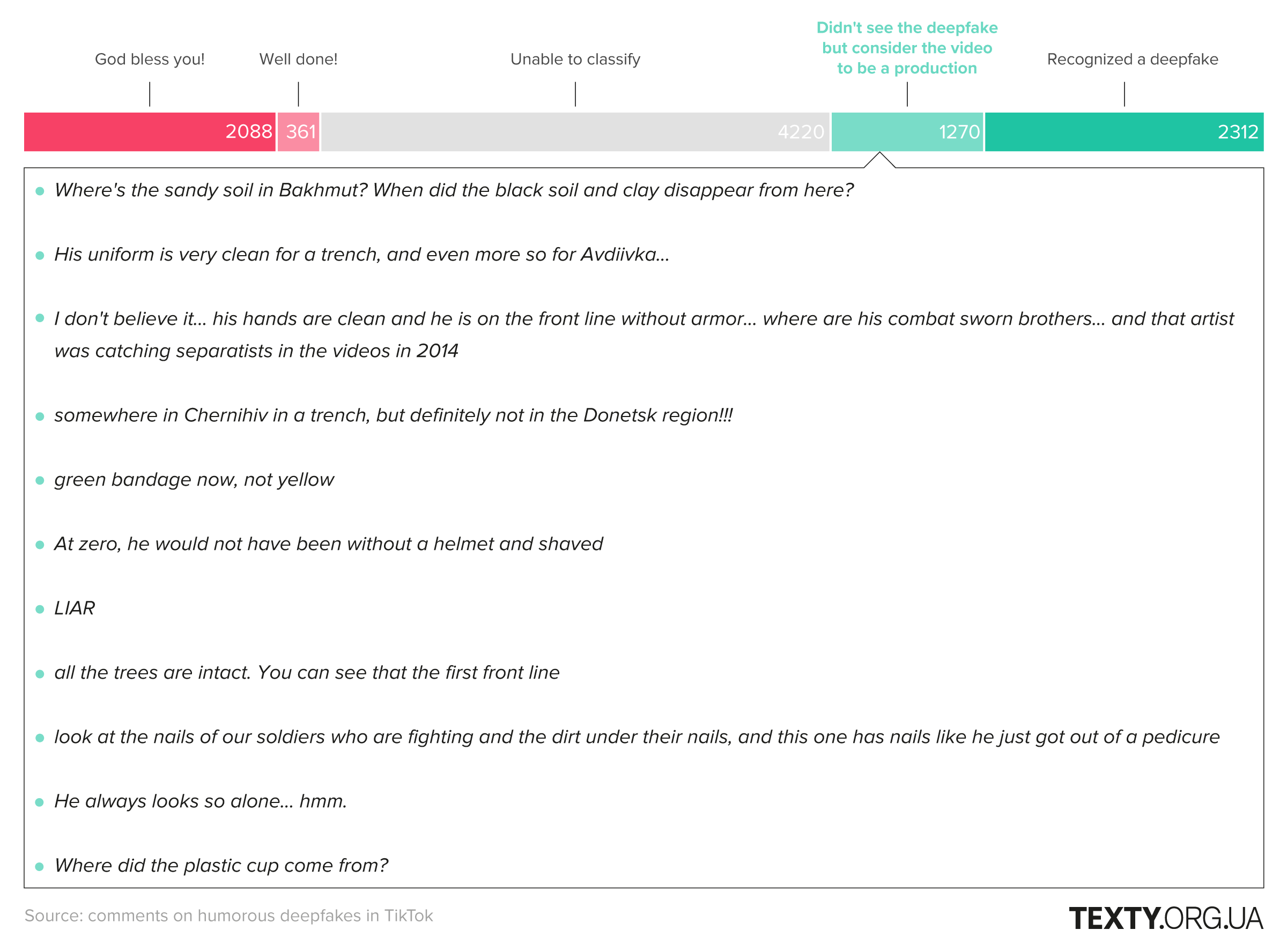

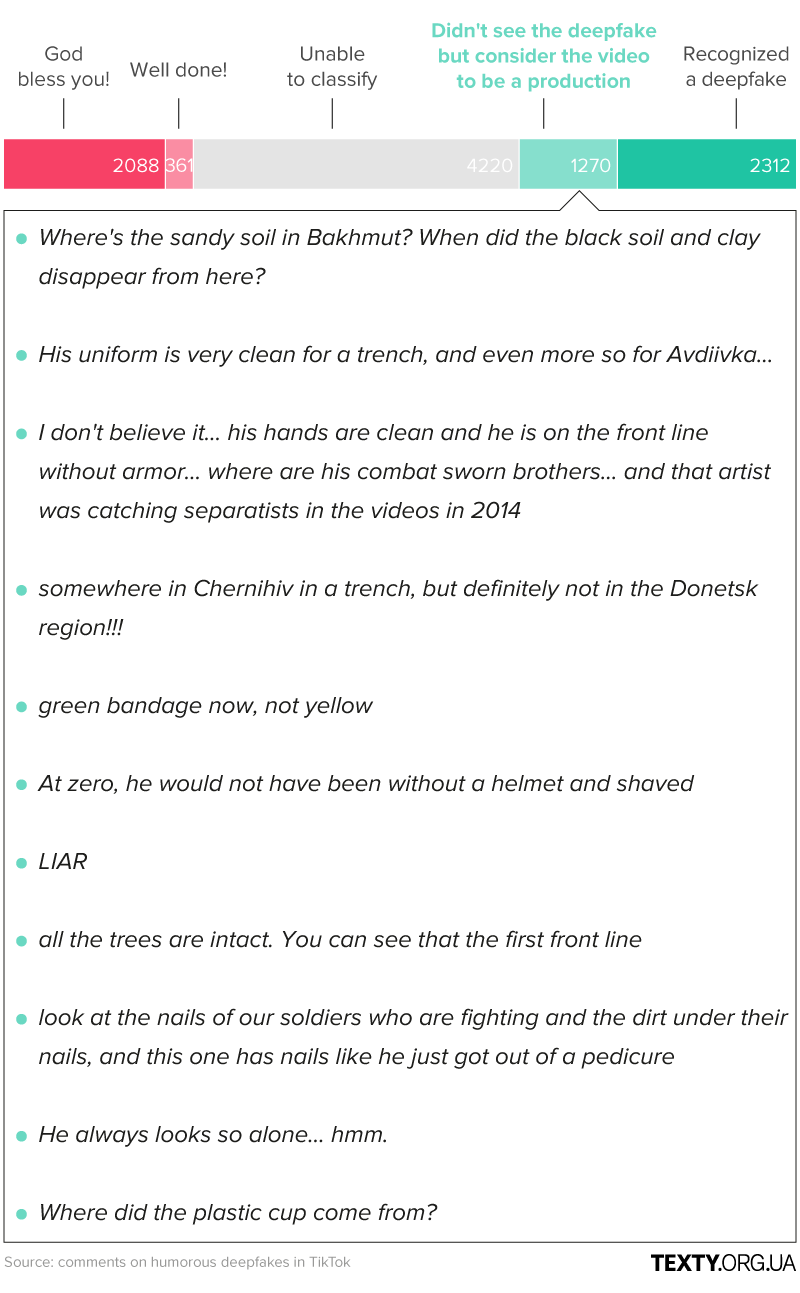

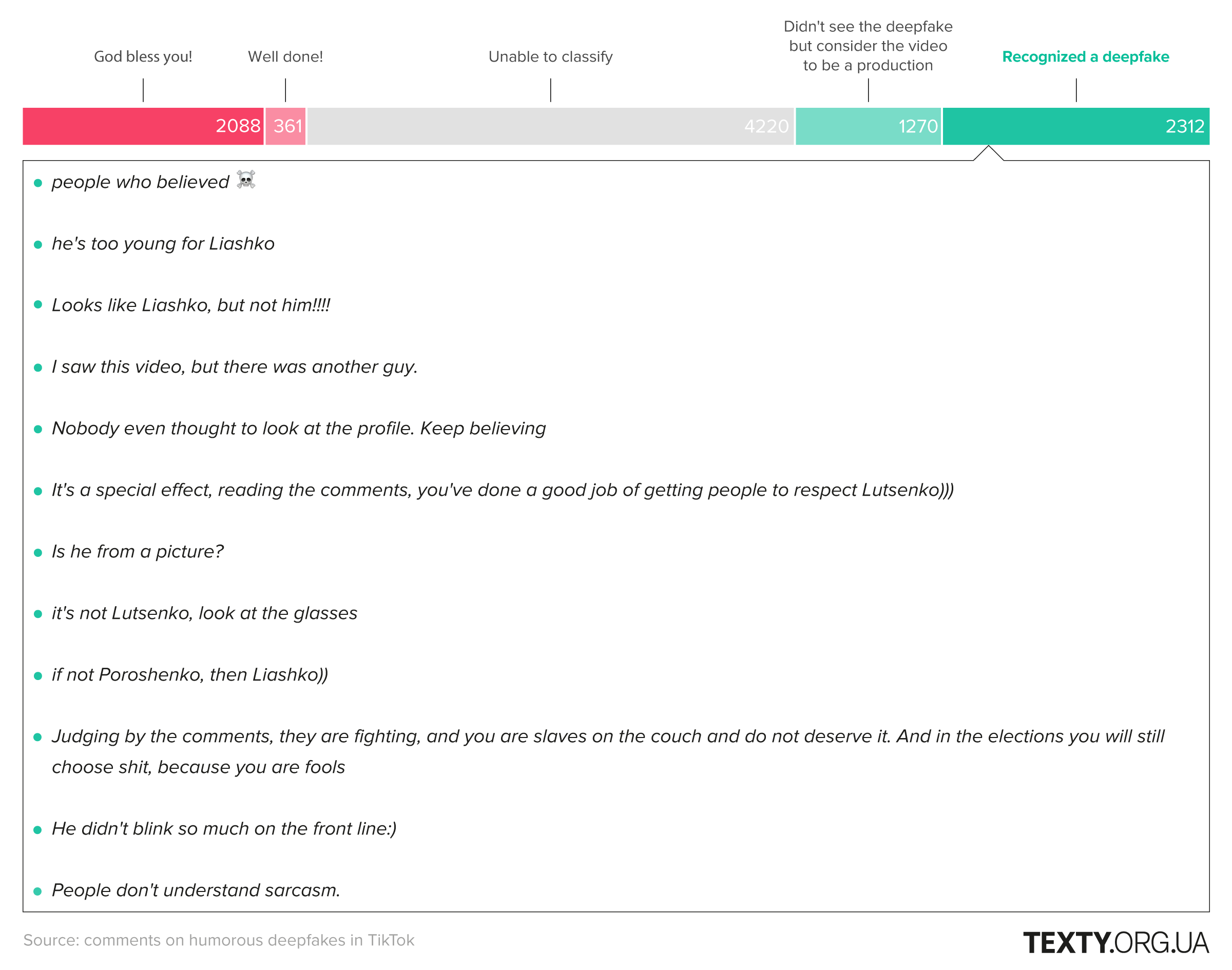

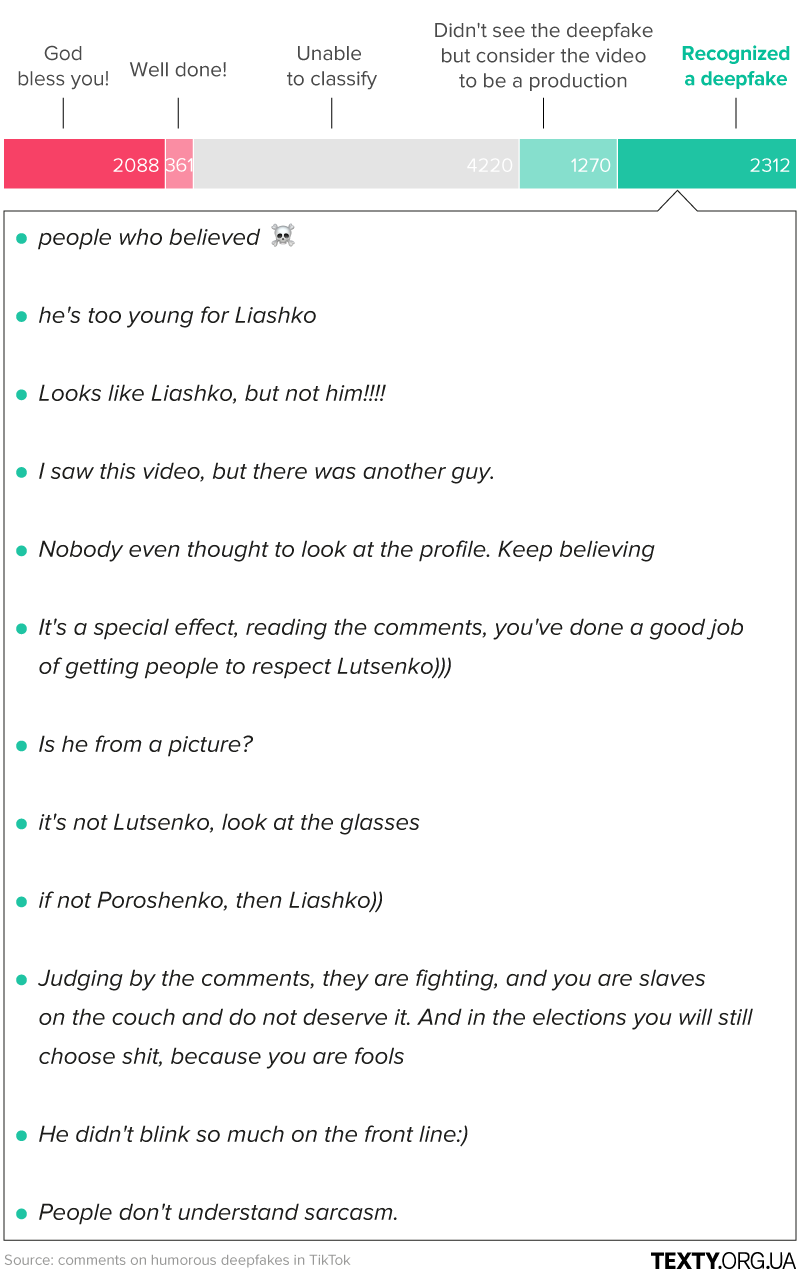

Now to the results: at least 20% of commenters (about 2,000 accounts) wished the fake Liashko and Lutsenko a guardian angel, expressed their respect, thanked them from the bottom of their hearts, or admired their courage.

Another 4% (360) were more restrained in their admiration, writing "Well done!", "Beautiful!", or "Respect!". Such comments could theoretically be addressed either to the main character or to the author of the video for a good joke (although I personally lean toward the former).

In 41% of cases, it was impossible to tell for sure from the comment whether the commenter recognized the fake, so we left them in the "gray zone."

23% of commenters (2,312) understood everything and were simply surprised by the other commenters or ridiculed them for their inattention, stupidity, or naivety. They also thanked the author for the good mood.

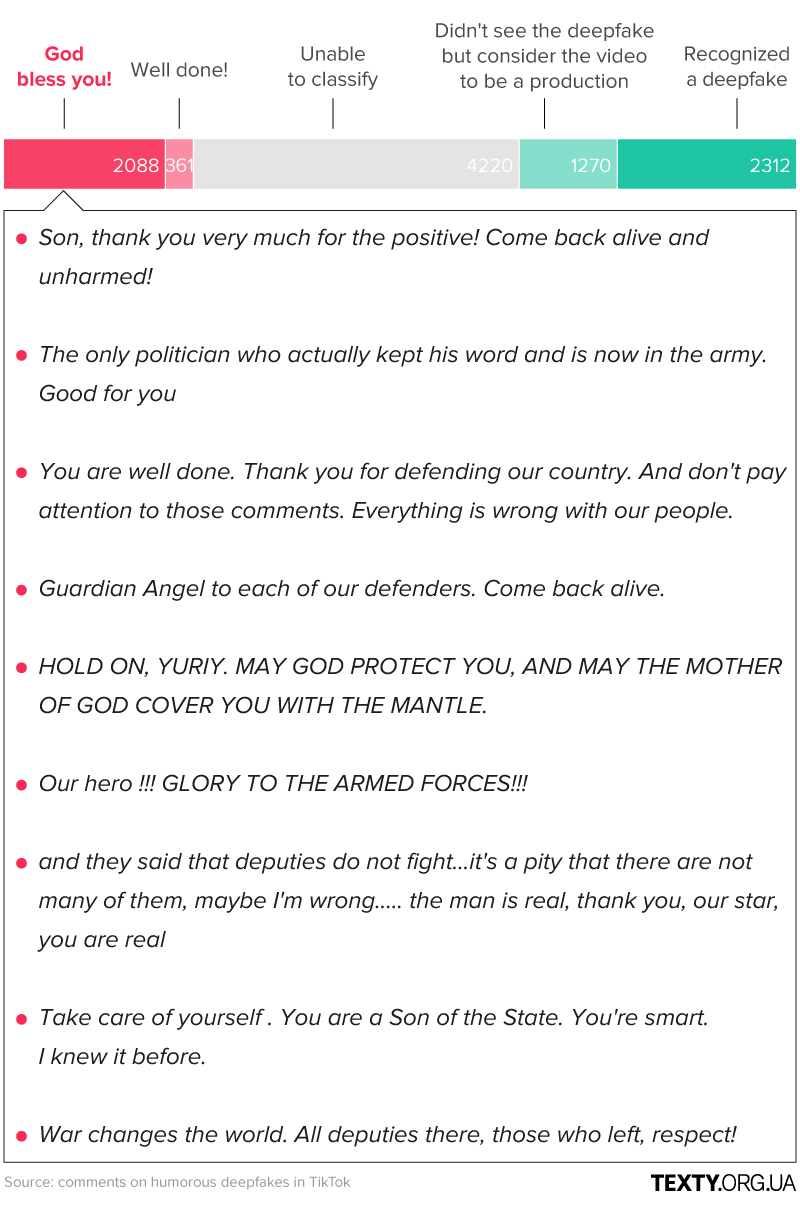

However, 12% of commenters (1,270) were highly critical of the video, even though they did not notice the deepfake itself. They suspected the trench was fake, alleged staging and acting, or even turned to fact-checking: they noted that there is no sand in Bakhmut, wondered why no other soldiers appeared in the video, pointed to the wrong color of the tape or a suspiciously neat manicure and clean hands, asked why all the trees at the front were intact, or simply recalled that Oleh Liashko had been exposed for staging in 2014 and concluded that nothing had changed since then. The fact-checking community can be proud. In this case, however, all that effort was in vain.

"I wonder if people really don't get it? 🤔 Or do they just troll in the comments?"

The term "troll," in the sense we use it for online commenters, dates back to the 1990s and Usenet forums. Even before the Internet as we know it today, people went to chat rooms and forums to troll newcomers for their ignorance. In this case, the idea of trolling becomes especially relevant.

Any attempt to judge from a comment whether its author spotted the fake inevitably ends in doubt: could anyone seriously leave such a comment under a random TikTok video titled "Yurko Lutsenko at the front line in Bakhmut after combat"?

"Thank you very much. You are an example for other high-ranking officials and MPs. You are a patriot of the Motherland. We bow low. Take care of yourself. Glory to our defenders!!!"

"Well, this is definitely trolling!" I thought about every other comment longer than a few grateful, solemn emojis. However, the sincerity of such comments is supported, at the very least, by their number: otherwise, just four videos would have had to attract more than 2,000 trolls. A simpler explanation therefore seems more likely: people simply believe what they see in their TikTok recommendation feed. The urge to leave a comment often outweighs the urge to open the channel's profile and see at a glance that the video should not be believed.

"Go to the profile, they are all fighting there"

At the same time, it is easy to blame "ordinary people" for inattention. The "poor because they are stupid" logic is convenient mainly because it absolves large commercial platforms of responsibility for promoting fake news and disinformation, or for helping unscrupulous fraudsters profit from the inattention and gullibility of those same "ordinary people."

Incidentally, the same author appears to run not only a TikTok channel but also a YouTube channel. It was registered in 2019, but its oldest videos date from the same period as the TikTok activity. It has 72,000 subscribers, 37 million views, and, most importantly, a detailed profile description:

"The videos are mostly humorous and created with the help of artificial intelligence. The characters in the content are not real, all events and circumstances are fictional. Enjoy your viewing. Glory to Ukraine."

Compare this with the TikTok profile description ("No biography"), and allow us a paragraph on how policies differ between platforms.

In March of this year, YouTube updated its policy on combating video fraud and manipulation. The platform now requires creators to disclose when they use AI to create videos, which is why the videos on this YouTube channel carry the "Altered or synthetic content" label. TikTok appears to be taking the same path: it requires creators to label AI-generated content, and in May it announced that it would also identify and label artificial or modified content on its own. None of the videos from the channel we studied carry such a label.

"I really want to believe, but I don't, sorry 😞😞"

All joking aside, the massive spread of deepfake technology has very real consequences.

Even now, deepfake doubles of Elon Musk are helping fraudsters make billions of dollars. The FBI warns Americans that cybercrime and AI-powered scams are on the rise. Deloitte shares the general concern: it has modeled scenarios of how much AI could increase fraud in the banking sector, and the estimates run to billions of dollars a year.

Yet even humorous deepfakes can feed so-called deepfake-related skepticism. The core idea is that the mass spread of this technology plays into the hands of disinformers and conspiracy theorists, because it undermines belief in facts and in reality itself. The more manipulation through fake or generated videos we encounter, the less we believe anything on the Internet, which makes it easier to fall into the trap of a blurred reality.

As a result, claiming that a photo or video is fake becomes a convenient and easy way to discredit its original source. Irish and British researchers confirmed this when they collected about 5,000 tweets from 2022 discussing deepfakes in the Russo-Ukrainian war: most of the posts they studied were not deepfakes themselves but accusations that real videos were fake.

Of course, there are also optimistic findings: some studies indicate that, on the contrary, fake news and disinformation have increased trust in reliable sources and media. And cases such as the Russian propaganda deepfakes about "Vokha" suggest that the advantage may indeed be on the optimistic side.